Intensive computation research not just for exploding star simulations anymore

By Steve Koppes, News Office

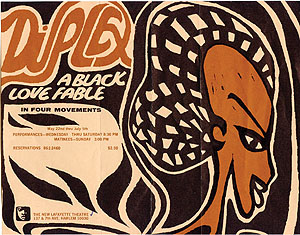

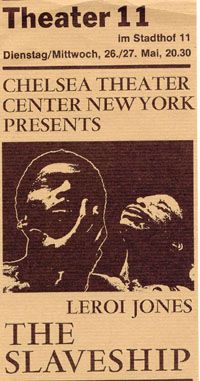

The ARTFL (American and French Research on the Treasury of the French Language) Project has teamed up with Alexander Street Press to create a database for scholars that includes playbills and posters of more than 1,200 works written by black playwrights between 1850 and 2000. It was created using Teraport computing power. Above is a promotional poster for the premiere of Ed Bullins’ Duplex performed in Harlem in 1970, and (directly below) LeRoi Jones’ Slaveship performed at the Chelsea Theater Center in New York in 1968.

Scholars taking an avid interest in computational resources on campus 10 years ago tended to be doing data-intensive simulations of exploding stars at the Center for Astrophysical Thermonuclear Flashes. But the days when their colleagues in the biological sciences, social sciences or the humanities could store and analyze their data on their desktop computers are on the wane.

“We’re seeing exponential growth in the number of people who want to compute,” said Ian Foster, Director of the Computation Institute. “Part of that is we’re hiring people who have the interest. Part of it is that people are facing new problems.”

Three years ago, the University installed a parallel computer called Teraport to support this kind of work. Teraport consists of 260 processors, each as powerful as a typical desktop computer.

“It’s not enormous, but it’s bigger than any other resource on campus,” Foster said. “What’s interesting is the number of people who are finding it useful and the breadth of demand that we see for it.” To date, nearly 800,000 jobs have run on Teraport, consuming more than 2.5 million hours of computing time, said Rob Gardner, Senior Research Associate in the Computation Institute.

On campus, nearly 120 users currently have access to Teraport. They represent more than a dozen local research groups and their collaborators from other institutions. Among these, 50 users are regular Teraport customers, each submitting more than 100 jobs with an average computing time of three hours.

Additional users have access to Teraport through the Open Science Grid, a national network dedicated to large-scale, computing-intensive research projects.

“Through the Open Science Grid interface, access has been given to 15 different virtual organizations representing hundreds of remote users who collaborate on projects directly affiliated with the Computation Institute or within the OSG Consortium,” Gardner said. “This accounts for roughly half of the usage of Teraport.”

Teraport might be analyzing genetic sequences one day while annotating literary texts the next. James Evans, Assistant Professor in Sociology and the College, uses Teraport for citation network analysis to identify patterns of interaction between universities and the biotechnology industry. The work can occupy up to 30 processors at a time as Teraport compares the citations of every article with those of every other article in his database.
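The article does not describe how Evans's analysis is implemented, but the idea of comparing every article's citations with those of every other article can be illustrated with a minimal sketch. The data, the function name `shared_citations`, and the choice of counting shared references as the comparison are all assumptions made for illustration, not his method.

```python
from itertools import combinations


def shared_citations(articles):
    """Count shared references for every unordered pair of articles.

    `articles` maps an article ID to the set of references it cites.
    Only pairs with at least one reference in common are returned.
    """
    overlaps = {}
    # combinations(..., 2) visits each unordered pair exactly once,
    # so n articles produce n * (n - 1) / 2 comparisons.
    for a, b in combinations(sorted(articles), 2):
        common = len(articles[a] & articles[b])
        if common:
            overlaps[(a, b)] = common
    return overlaps


# Hypothetical toy data, not drawn from any real database.
articles = {
    "paper_A": {"ref1", "ref2", "ref3"},
    "paper_B": {"ref2", "ref3", "ref4"},
    "paper_C": {"ref5"},
}
pairs = shared_citations(articles)
```

Because the number of pairs grows roughly with the square of the number of articles, and each pair can be compared independently of the others, work of this shape divides naturally across many processors.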

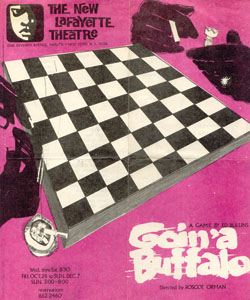

Above is the playbill cover for the premiere of Goin’ a Buffalo by Ed Bullins. The play was performed in 1968 at the New Lafayette Theater in Harlem, New York.

In work that requires more computing power, Evans also analyzes the web of authors and organizations producing these documents and the words within them to identify the scientific subfields they address.

Distributed computing was possible at his doctoral institution, Evans said, “but the computers on the network were of different sizes, had slightly different software and sometimes operating systems.” Teraport offers a uniform operating system and software that, combined with other features, has in some cases saved Evans months of computing time, he said.

Last November, the first Chicago Colloquium on Digital Humanities and Computer Science convened to address the question of what scholars will do with a million digitized books. The potential challenges and opportunities presented by such a data mass moved closer to reality in June, when a consortium of 12 universities, including Chicago, announced an agreement to digitize up to 10 million books as part of the Google Book Search Project.

“In digital humanities we will be facing massive amounts of textual material in the next three or four years,” said Mark Olsen, Assistant Director of the Project for ARTFL (American and French Research on the Treasury of the French Language). “There are a number of teams, including the ARTFL Project, which are ramping up to adopt machine-learning technologies on how to handle a million books.”

Olsen and his associates presented three papers at the 2007 Digital Humanities Conference at the University of Illinois in June. One of the three papers described their data-mining project pertaining to gender, race and nationality in black drama from 1850 to 2000.

In collaboration with Alexander Street Press, the ARTFL Project has compiled a database containing more than 1,200 works written by black playwrights since the middle of the 19th century. Scholars can mine this database to examine differences in language use among authors and their characters.

“Initial work on lexical choices in this collection has revealed striking differences in word use and sense between male and female authors and characters, as well as American and non-American authors,” wrote Olsen and his co-authors in their paper on the data mining of black drama.

The amount of computer power that ARTFL’s various projects require of Teraport is probably tiny compared to what the sciences demand, Olsen said, but it is critical nevertheless. “Even small tests on our highest-power machines would take 15 or 20 hours to run. These kinds of runs are much faster on the Teraport,” Olsen said. “It extends our capabilities quite a bit.”
